So-called “4th Generation” object-oriented languages have failed us.

It’s not their fault, and they can do any task needed. But they’re not the best tool for any job.

The heady days of the late 80s and early 90s gave us a slew of Smalltalk-inspired languages. Objective-C and C++ were early favorites, with Java and C# coming to dominate business development later. They were supposed to solve many of the problems that languages like C left unsolved.

Soon after they arrived, those problems stopped mattering in the vast majority of situations.

In practice, all of the “advantages” were cast aside due to naive beliefs stemming from the “development as engineering” movement dominated by Design Patterns.

Now, more than ever, modern distributed software architectures turn these concepts into pure overhead, kept around out of tradition.

Code Reusability

Object orientation was supposed to solve our code duplication problem. Every object was a self-contained software entity: a black box that could be used throughout the rest of the program.

But this assumed a world where the vast majority of functionality lived within a single codebase. Even when these languages emerged into “real” development, that just wasn’t reality. The vast majority of an application is not in its own codebase but in the libraries it uses. The interfaces to those libraries are the “black box.”

Modern development stacks with dependency management make that experience gorgeous. Gone is the DLL hell of C development. In Go, Rust, Python, and even Java, I can recreate the entire application within the build and produce a single reproducible artifact (assuming, for some of them, that the interpreter and tooling are available).

Go and others do an amazing job of providing public/private-style guardrails that restrict developers to a library’s actual interface rather than its internals. But in languages like Python, just being a reasonable human keeps a codebase sane.

Overloaded Object Model

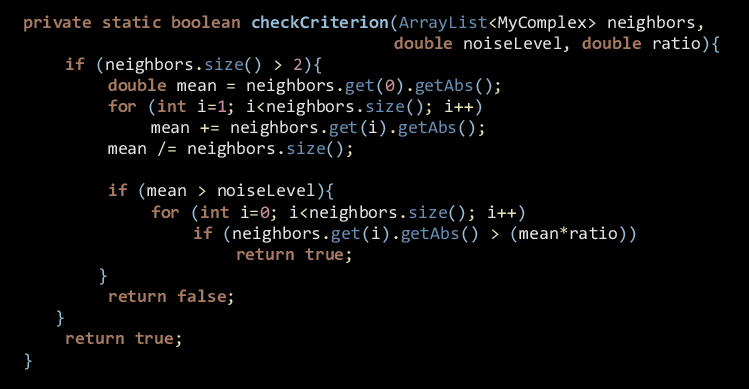

It started with the classical inheritance hierarchy. Then we added Interfaces. Then Abstract Classes. I can’t tell you how many times I have seen these misused. I see codebases where “every class must have an Interface.” You know, in case you want to change something. These facilities enabled so much “defensive programming” that there is more boilerplate than logic. Vestigial code sits everywhere “just in case,” obscuring the logic. I’ve never seen this pay off. When things change, the code inevitably has to change too; you can’t just drop a new class in behind the interface.

Interfaces are super useful. But they’re useful when you know you’ll use them, not when you think you might one day. I’ve used them to back data structures with a variety of dissimilar data sources: files, Redis, Postgres, etc. They’re also the right tool when you want to abstract over multiple possible dependencies that provide the same functionality. In Go, which has no inheritance, I also use them to deal with typing. But OO languages offer inheritance, abstract classes, and interfaces as competing solutions to that same problem.

I swear, I’ve never seen a useful abstract class in my career.

The practice of one class per file makes this a nightmare for maintenance and troubleshooting. You have to set up an IDE and use its tools to hop around the codebase and try to figure out what the heck it’s doing. Logic is practically never contained within a single class.

Generics, Reflection, Oh My!

I’ll just say this: I love Go. If my code compiles, it’ll run. And 95% of the time, the first thing that makes my text editor happy does exactly what I want it to. Strongly and statically typed languages are beautiful for that.

But traditional OO languages added generics because sometimes being strongly typed is a pain in the butt, and Interfaces aren’t enough. Generics are great for library-like functionality: there is no reason to rewrite a linked list for every type. But within a “normal” codebase, they’re a trap. Now the compiler can’t tell when you done messed up.
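A linked list is exactly the library-style case where generics pay off. Here is a minimal sketch in Go, assuming Go 1.18 or later (the release that added type parameters). `List` and its methods are illustrative names, not a standard-library type.

```go
package main

import "fmt"

// List is a minimal singly linked list, written once for any element type T.
type List[T any] struct {
	head *node[T]
	size int
}

type node[T any] struct {
	value T
	next  *node[T]
}

// Push adds v to the front of the list.
func (l *List[T]) Push(v T) {
	l.head = &node[T]{value: v, next: l.head}
	l.size++
}

// Len reports how many elements the list holds.
func (l *List[T]) Len() int { return l.size }

// Values copies the elements into a slice, front to back.
func (l *List[T]) Values() []T {
	out := make([]T, 0, l.size)
	for n := l.head; n != nil; n = n.next {
		out = append(out, n.value)
	}
	return out
}

func main() {
	var ints List[int]
	ints.Push(1)
	ints.Push(2)
	// ints.Push("three") would not compile: the element type is fixed to int.

	var names List[string]
	names.Push("redis")
	names.Push("postgres")

	fmt.Println(ints.Values(), names.Values()) // [2 1] [postgres redis]
}
```

Because `T` is fixed at each use site, the compiler still rejects mixing element types, which is the kind of checking the paragraph worries about losing once generics spread into everyday application code.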

Reflection. I love reflection. I’ve done terrible things with it. But it’s amazingly useful if you’re writing serialization/deserialization code. I’ve built monitoring tools that could serialize the same data into multiple formats for tools like Graphite, Prometheus, etc.

But in modern Java frameworks, it’s gone way too far. You write a class, sprinkle on some annotations or XML, and your code is wired up for you. Poof!

But the combination of these has left these languages bereft of the advantages of being strongly and statically typed. You don’t discover problems until runtime. And it’s worse than Python or Ruby, because the compiler/interpreter has no idea what’s happening and so can’t produce useful error output.

I’ve never been a professional Java programmer, yet probably 3 days a week I help some poor developer figure out why their app behaves in a way they didn’t expect. I write Java to test systems built for Java developers or to recreate horrific production performance problems and security bugs.

Setters and Getters

This falls into the same category: a problem of “defensive programming” practice, not of the language itself.

A massive percentage of the code in these languages is setter and getter boilerplate. The languages were designed to have public and private attributes, but it was decided that public attributes should never be used: you might one day need to attach logic to setting or getting a value, and you don’t want that change to ripple through the codebase.

This almost never happens. When it does, methods can be added and consumers fixed with sed or find/replace.

I have used Python’s after-the-fact support for this, turning a plain attribute into a property when I needed to make a change like that. That concept is extremely practical.

But even when you don’t drink this Kool-Aid, you’re stuck with it. Every framework expects it.

“This problem has been solved” you say.

- Your IDE can generate them for you! It shouldn’t have to, and the lines of code are still sitting there.
- Lombok! This is a disgusting hack. IDEs have had to create dedicated tooling to support this ball of compile-time magic.
- Kotlin! OK, I really thought it might be better. It’s not. I find it adds more net complexity than it removes.

My point

There’s probably a lot more, but these issues are top of mind.

I think OO is a lot like IPv6. It solved the problems it was meant to solve. But by the time it was ready for the mainstream, those weren’t the main problems in software development.

Caveats

One caveat: I mainly deal with three kinds of programming.

1. Systems programming. Think OS, networking, embedded systems.
2. Integrations. Making one system talk to another, like writing a tool that lets Prometheus scrape metrics from a database, or writing things like Terraform backends for internal infrastructure systems.
3. Business development. The software that provides non-IT features for a business.

All of this may not apply in domains like video game programming. I found C++ very useful for high-performance computing in the late 90s and early 2000s, when I used very little external code.

Also, you can write good and bad software in any programming language. There is no “best.” When choosing, you have to look at the problem you’re solving, the people you have, and whether you have time for them to learn something new.

And you can be crazy like me and try to learn a new language every year or so just to exercise your brain. I’ve never written anything in a Lisp for my job, but I’ve written Common Lisp, Scheme, Emacs Lisp, Clojure, etc. just for the experience. I also find that it helps me write better software in my primary languages.